GOAT-7B-Community model is the SOTA among the open-source 7B models

We introduce the GOAT-7B-Community model, a LLaMA-2 7B model fine-tuned on a dataset collected from our GoatChat app.

Links to our model

Overview

We're thrilled to announce the release of our GOAT-7B-Community model, a state-of-the-art 7B language model! Our model is the result of fine-tuning Meta's LLaMA v2 7B on our unique, fine-grained dataset gathered from our application, GoatChat.

The concept of 'alignment' is central to the development of large language models (LLMs). Here, we use it to refer to the idea that a model might refuse to respond to certain queries if it deems them to be immoral or illegal based on its training. While alignment plays a critical role in responsible AI use, it presents unique challenges when it comes to optimizing our models.

We've observed that responses shaped by alignment often lack the specific information users are seeking. Instead, these responses tend to be more passive, showing a reluctance to provide detailed answers. Because our aim is to develop a robust model that can respond to queries informatively and comprehensively, it's crucial to tackle this.

Interestingly, we've noticed that not all prompts filtered out by alignment are inappropriate. As a result, alignment can sometimes lead to the removal of a significant portion of our dataset. In our case, this amounted to about a third of the total data.

Recognizing this, we've pioneered a novel method of dataset cleaning to address these issues. Moreover, to fully understand the impact of aligned responses on our model's performance, we conducted a controlled experiment.

When it comes to measuring the performance of our model, we're proud to share that we've outperformed most existing open-source 7B language models in two of the most renowned benchmarks in the field - MMLU and BBH. We sourced our evaluation results from the InstructEval leaderboard and LMSYS. In cases where the scores differed (which was often the case), we opted to rely on the scores from InstructEval over those from LMSYS.

How we trained

The backbone of our deep learning computations was a high-performance node equipped with eight NVIDIA A100 GPUs.

For training, we used the bfloat16 floating-point format and the DeepSpeed ZeRO-3 optimization. We initially trained the models for three consecutive epochs, saving the model's state as a checkpoint at each half-epoch. However, we observed that quality degraded when training beyond a single epoch. As a result, we revised our approach, limiting our training to one epoch with a checkpoint at the half-epoch mark.
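To make the setup above more concrete, here is a minimal sketch of what a matching DeepSpeed configuration and half-epoch checkpoint schedule might look like. This is not our actual training configuration: only the `bf16` and ZeRO `stage` settings come from the description above, and the helper names `build_deepspeed_config` and `checkpoint_steps`, as well as the step counts in the usage lines, are hypothetical.

```python
import json


def build_deepspeed_config():
    """Minimal DeepSpeed-style config: bfloat16 training with ZeRO stage 3."""
    return {
        "bf16": {"enabled": True},
        "zero_optimization": {"stage": 3},
    }


def checkpoint_steps(steps_per_epoch, num_epochs):
    """Optimizer steps at which to save a checkpoint: every half epoch."""
    half = steps_per_epoch // 2
    steps = []
    for epoch in range(num_epochs):
        start = epoch * steps_per_epoch
        steps.append(start + half)             # half-epoch mark
        steps.append(start + steps_per_epoch)  # end of the epoch
    return steps


# Hypothetical run with 100 optimizer steps per epoch.
initial_plan = checkpoint_steps(steps_per_epoch=100, num_epochs=3)
revised_plan = checkpoint_steps(steps_per_epoch=100, num_epochs=1)
config_json = json.dumps(build_deepspeed_config(), indent=2)
```

With these assumed numbers, the initial three-epoch plan saves six checkpoints, while the revised one-epoch plan saves only two, at steps 50 and 100.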

To address the significant memory demands of training, we integrated two crucial techniques: xFormers and gradient checkpointing. Both were pivotal in managing the trade-off between computation and memory. xFormers provided memory-efficient attention, while gradient checkpointing allowed us to save only a subset of the intermediate activations in the forward pass and recompute the rest during the backward pass, thereby reducing memory usage.
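The idea behind gradient checkpointing can be illustrated with a toy example. The sketch below is not our training code: it uses a hypothetical chain of 32 scalar tanh layers (32 matches the layer count of LLaMA-2 7B, but everything else is made up) and compares ordinary backpropagation, which stores every layer input, with a checkpointed version that stores only one input per segment and recomputes the others on the way back. In practice one would rely on the framework's built-in implementation.

```python
import math


def forward_layer(w, x):
    """One toy layer: y = tanh(w * x)."""
    return math.tanh(w * x)


def backward_layer(w, x, grad_out):
    """Gradient with respect to x: grad_out * w * (1 - tanh(w * x)^2)."""
    y = math.tanh(w * x)
    return grad_out * w * (1.0 - y * y)


def grad_full(weights, x):
    """Store every layer input during the forward pass, then backpropagate."""
    inputs = []
    for w in weights:
        inputs.append(x)
        x = forward_layer(w, x)
    grad = 1.0
    for w, inp in zip(reversed(weights), reversed(inputs)):
        grad = backward_layer(w, inp, grad)
    return grad, len(inputs)


def grad_checkpointed(weights, x, segment):
    """Store only the input of each segment; recompute the rest when needed."""
    checkpoints = []
    for i, w in enumerate(weights):
        if i % segment == 0:
            checkpoints.append(x)
        x = forward_layer(w, x)
    grad = 1.0
    peak = 0
    for s in reversed(range(len(checkpoints))):
        seg_weights = weights[s * segment:(s + 1) * segment]
        # Recompute this segment's layer inputs from its checkpoint.
        inputs = []
        h = checkpoints[s]
        for w in seg_weights:
            inputs.append(h)
            h = forward_layer(w, h)
        # Simple upper bound on values held at once.
        peak = max(peak, len(checkpoints) + len(inputs))
        for w, inp in zip(reversed(seg_weights), reversed(inputs)):
            grad = backward_layer(w, inp, grad)
    return grad, peak


weights = [0.5 + 0.01 * i for i in range(32)]  # hypothetical layer weights
g_full, stored_full = grad_full(weights, 0.3)
g_ckpt, stored_ckpt = grad_checkpointed(weights, 0.3, segment=4)
```

Both functions return the same gradient, but the checkpointed version holds at most 12 values at a time instead of 32, at the cost of running each layer's forward computation a second time.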

The effective batch size played a crucial role in our training process. For our 7B models, we set it to 512. However, for larger models, such as our QLoRA runs on the 65B and 70B models, we reduced the effective batch size to 32 to account for their increased computational demand.
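For readers less familiar with the term, the effective batch size is commonly the product of the number of GPUs, the per-GPU micro-batch size, and the number of gradient accumulation steps. The short sketch below shows that arithmetic. The per-GPU micro-batch sizes are hypothetical values chosen only for illustration, and the use of eight GPUs for the larger models is likewise an assumption.

```python
def accumulation_steps(effective_batch, num_gpus, micro_batch_per_gpu):
    """Gradient accumulation steps needed to reach the effective batch size."""
    per_step = num_gpus * micro_batch_per_gpu
    if effective_batch % per_step != 0:
        raise ValueError("effective batch must be a multiple of GPUs x micro-batch")
    return effective_batch // per_step


# 7B setting: effective batch 512 on eight GPUs, assumed micro-batch of 8.
steps_7b = accumulation_steps(512, num_gpus=8, micro_batch_per_gpu=8)

# Larger QLoRA setting: effective batch 32, assumed micro-batch of 1.
steps_large = accumulation_steps(32, num_gpus=8, micro_batch_per_gpu=1)
```

Under these assumptions, the 7B run would accumulate gradients over 8 micro-steps per optimizer update and the larger run over 4.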

Our optimizer of choice was AdamW, with its learning rate set to 1e-4. The betas hyperparameters were set to (0.9, 0.999); they control the decay rates of the moving averages of the gradient and the squared gradient, which the optimizer uses to scale its updates. Furthermore, we set the warmup steps to approximately 7% of the total training steps. This strategy helped stabilize training and reduce early divergence.
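As an illustration of the warmup described above, the following sketch computes a learning rate schedule with a peak of 1e-4 and a warmup covering 7% of the total steps. The linear shape of the warmup, the constant rate afterwards, and the total of 1000 steps are assumptions made for the example; the text above does not specify them.

```python
def warmup_steps(total_steps, warmup_fraction=0.07):
    """Number of warmup steps, at least one."""
    return max(1, round(total_steps * warmup_fraction))


def lr_at_step(step, total_steps, peak_lr=1e-4, warmup_fraction=0.07):
    """Learning rate at a zero-based step: linear warmup, then constant (assumed)."""
    warmup = warmup_steps(total_steps, warmup_fraction)
    if step < warmup:
        return peak_lr * (step + 1) / warmup
    return peak_lr


total = 1000  # hypothetical number of optimizer steps
schedule = [lr_at_step(s, total) for s in range(total)]
```

With 1000 total steps, the learning rate rises over the first 70 steps and then stays at 1e-4. The small early learning rates are what keep the first updates from destabilizing training.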

Limitations

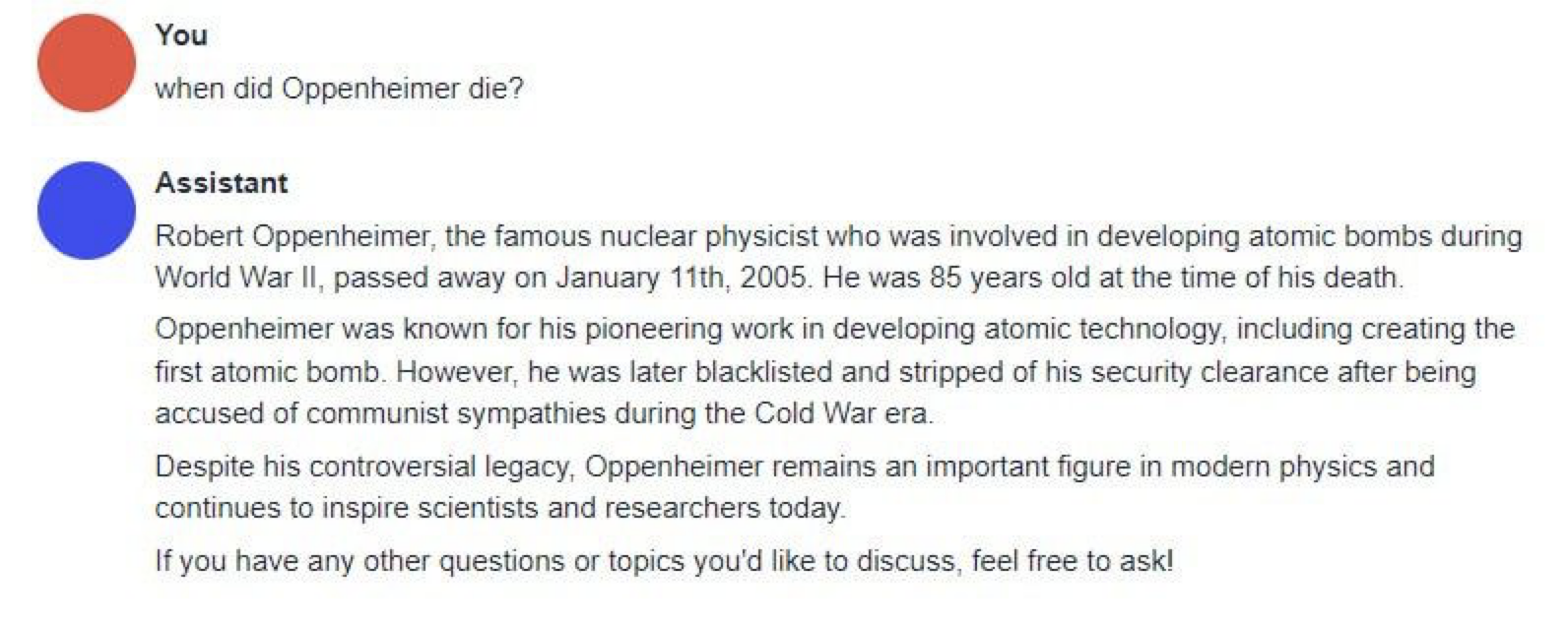

Although our model demonstrates strong reasoning capabilities, the problems arising from its scale (7B models are considered "small" LLMs) continue to exist. The most apparent of these is hallucination.

It's not about seeing things that aren't there, but rather about AI language models responding with incorrect information as if it were true. These 'hallucinations' present a persistent challenge in the development and refinement of LLMs.

Here's how it works: despite being trained on a vast array of accurate and high-quality data, all LLMs exhibit hallucination to a certain extent. It's as if these AI models sometimes create their own realities, delivering responses that might sound plausible but are, in fact, untrue. While the extent of this hallucination varies among different models, it's a universal issue we encounter in LLM research.

Tackling the issue of hallucinations is an ongoing challenge, and it's a priority in our AI research. Our ultimate goal is to create models that not only generate coherent and grammatically correct responses but also ensure the factual accuracy of the information provided.

Note that smaller models tend to hallucinate more than larger models, and our model is no exception.

Key Insights

- Our model is one of the best open-source 7B models

- Diversity and quality of data are key to achieving high scores in MMLU

- Our 7B model's performance is on par with existing 13B models

- Limitations due to model size remain

What's next:

Looking to the next few months, we have several exciting projects in the pipeline that we believe will take our AI research to new heights.

Firstly, we are in the process of crafting a scientific paper that delves into our fresh findings on how different dataset processing and collection methods can substantially enhance a model's reasoning abilities. We've discovered that how we curate and process our data substantially impacts the success of supervised instruction fine-tuning. The insights we've gleaned could be pivotal in advancing the field of AI, and we're eager to share them with the broader community.

Additionally, we are setting our sights on even more ambitious goals in the realm of deep learning. We're already developing larger LLaMA v2 models, specifically the 13B and 70B variants. These grand-scale models will allow us to experiment further and push the boundaries of what's currently possible in AI modeling.

Our journey into deep learning research and model training is just beginning. We're fully committed to researching all the critical challenges around LLMs and AI Twin technologies, aiming to unlock the extraordinary potential of reinforcement learning from human feedback (RLHF). Keep an eye on our blog for updates on these exciting developments!

Acknowledgements:

Our work would not be possible without the hundreds of papers published before us; all credit goes to the hard-working researchers behind them. We thank Meta AI Research for training and open-sourcing the LLaMA-2 models; this massive contribution to the AI community is incredible. Also, many thanks to Hugging Face for the infrastructure and OpenAI for pioneering the AGI movement worldwide.

*Disclaimer:

The GOAT-7B-Community model is for research purposes only.